We live in interesting times. The technological revolution has hit a fever pitch of new advancements that have improved productivity and quality of life worldwide. While work is still left to be done, poverty and hunger are at the lowest levels in the history of the world. For many, our time has been liberated for recreational activities and to pursue passions and creative endeavors that would have been unheard of even a few decades past.

It has been many years since I’ve been charged with writing poetry. When it comes to newfound free time, I’m more of a music guy myself. So when my 17-year-old son came to us last week, vexed about a poetry project for a college-level English composition class, I didn’t expect to be much help. The assignment was fairly specific, with required length, meter, and style: well defined, unlike many software projects I’ve had to architect and develop over my career.

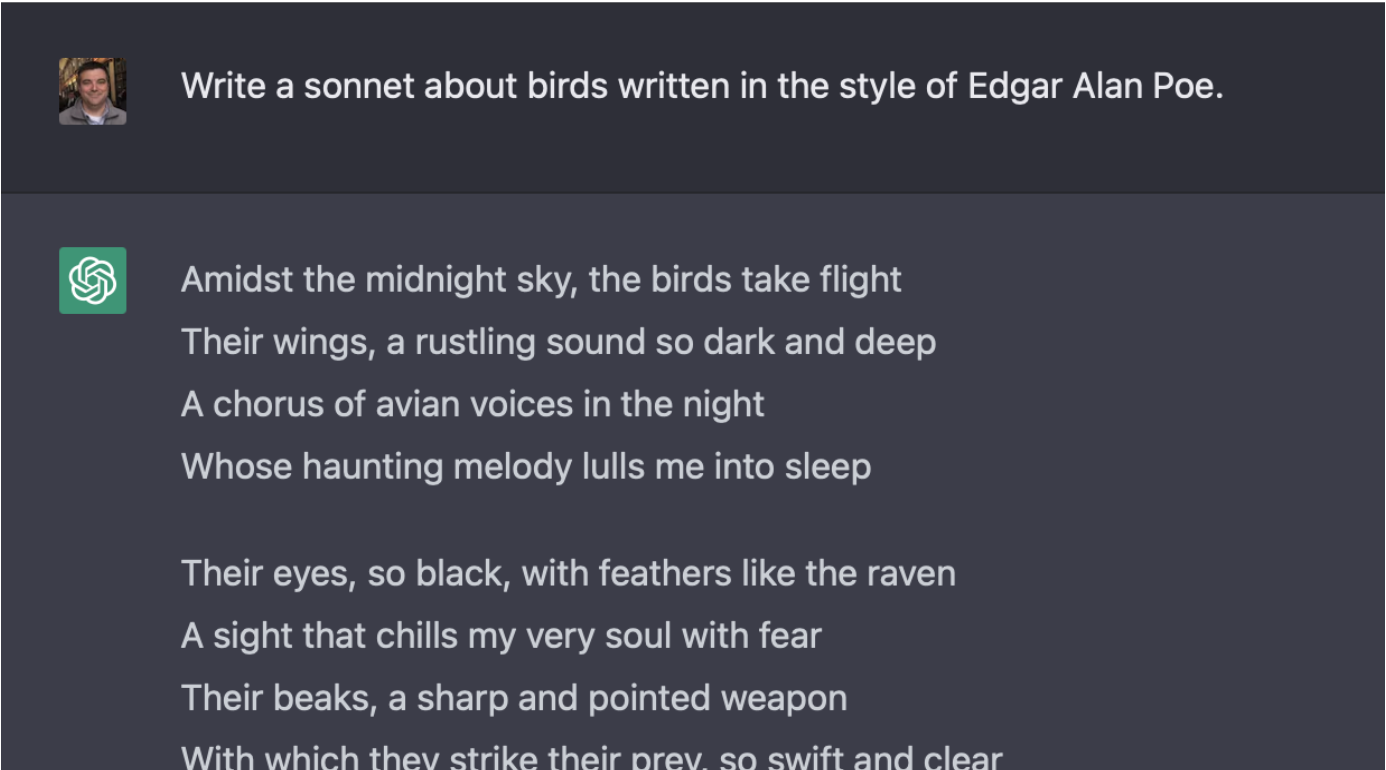

My initial assumption was that my son had inherited my more analytical, less creative writing style and had done poorly. Instead, his frustration was with competing with those in his class who had openly admitted to plagiarizing their submissions in clear violation of school policy — representing work of another as their own without crediting the original author. Of course, cheating in school is probably as ancient as the very first schoolroom. What shocked me was the claim that a substantial number of the students used ChatGPT to write their poems — and the professor was fully unaware. I don’t live in Silicon Valley, where even the kindergartners are working on their first IPO, but in a more rural area of the country. Advancements in technology clearly are not limited by geographic bounds or nearness to technology centers.

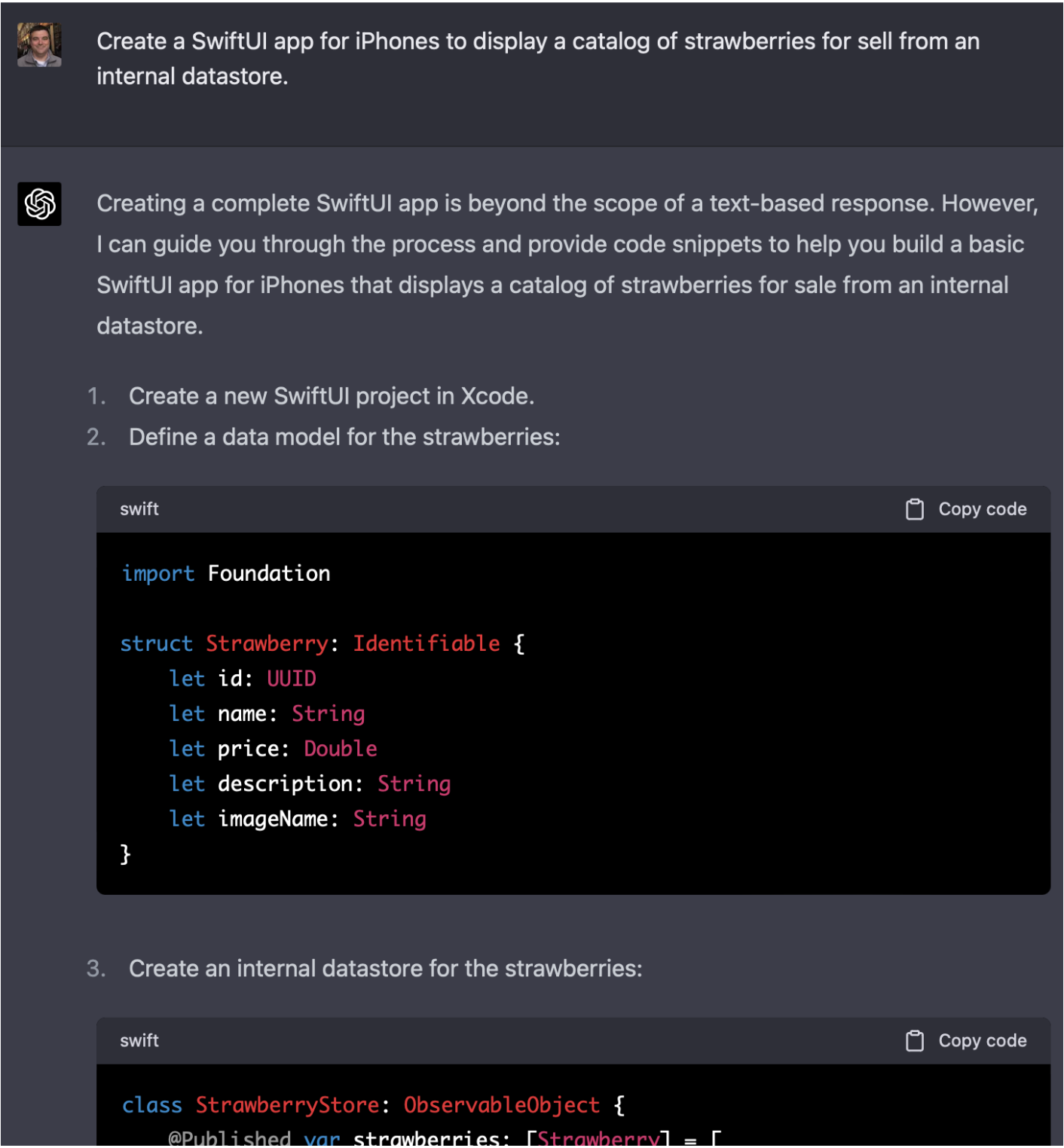

Created using the ChatGPT-3.5 model at openai.com

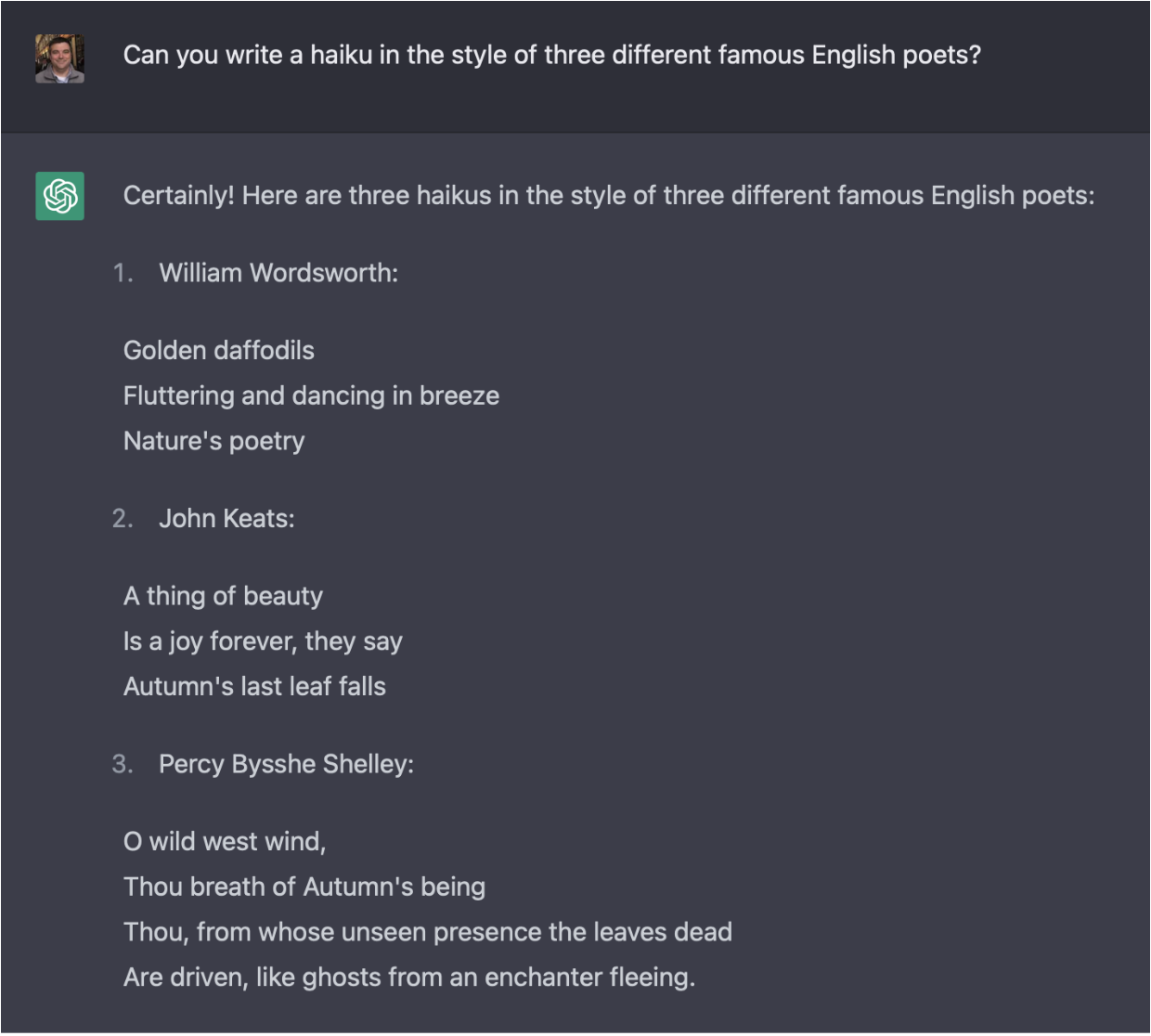

Created using the ChatGPT-3.5 model at openai.com

I work for a wonderful technology company. I spent nearly 25 years of my career engineering software. I even had the opportunity to work on some technology that sometimes felt pretty magical, including the audio recognition technology that sits underneath the Shazam system. I’m still awestruck by it, like the millions of people who use it every day to find out what song is playing in the background at a restaurant or pub. And AI has long been a frequent topic of conversation at the virtual watercooler of the now-common remote workspace. Yet, for the last month, discussion of the latest advancements in AI exhibited in DALL-E and ChatGPT has been almost constant.

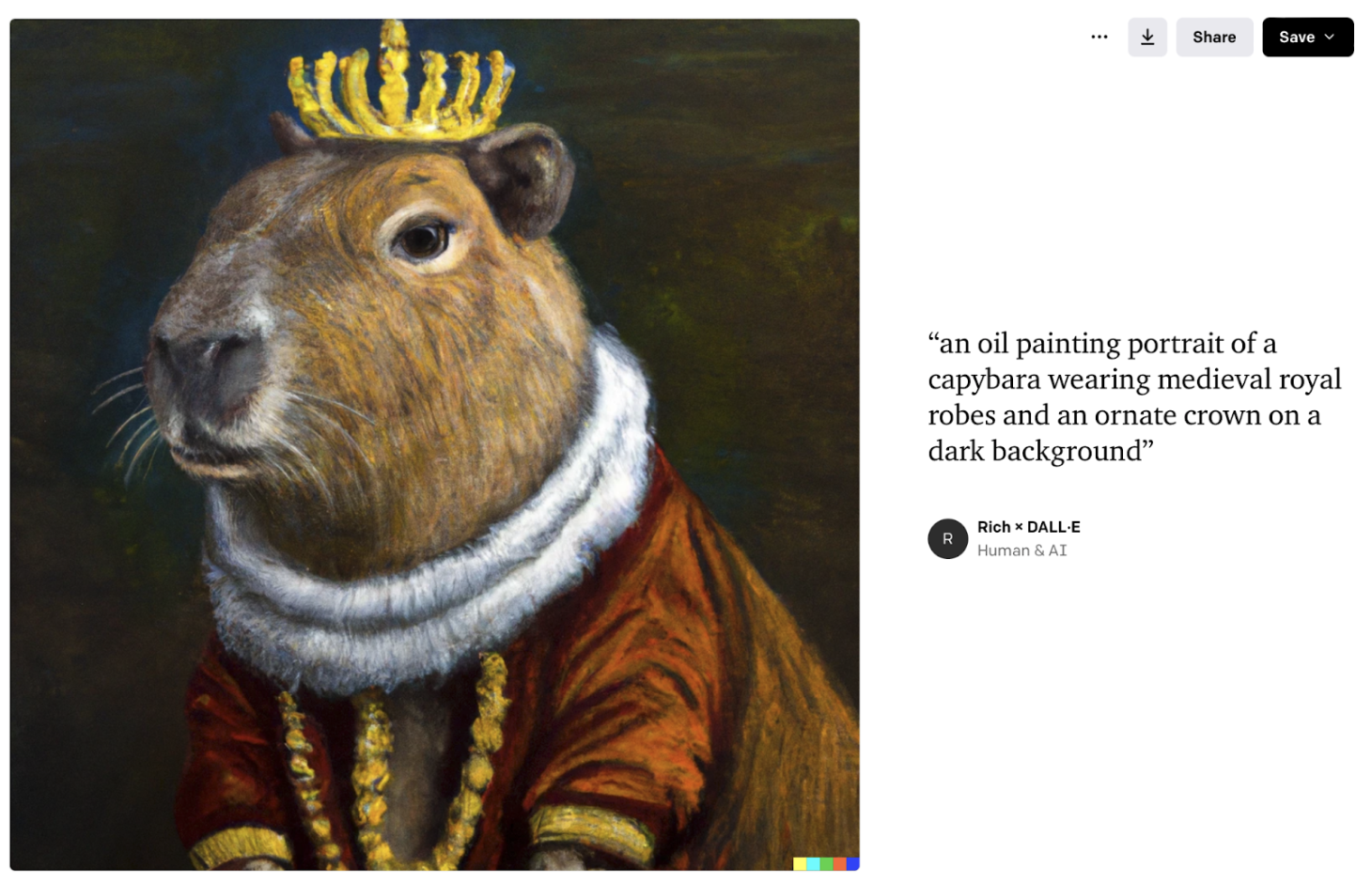

Created using DALL-E 2 at openai.com


The potential of AI that is available to pretty much anyone today is truly intoxicating. Whether it is distilling vast amounts of knowledge into a usable form or synthesizing a portrait of Steve Jobs in the style of Renoir, the possibilities are limitless, with potential we haven’t even conceived of yet. A recent popular demo took on a nearly unimaginable task: altering a movie so that it seemed to have been filmed in a different language. The AI was not only able to transform the audio while maintaining the original speaker’s voice but also modified the video presentation of the lips and facial expressions so that it looked like the speaker was actually speaking the substituted language.

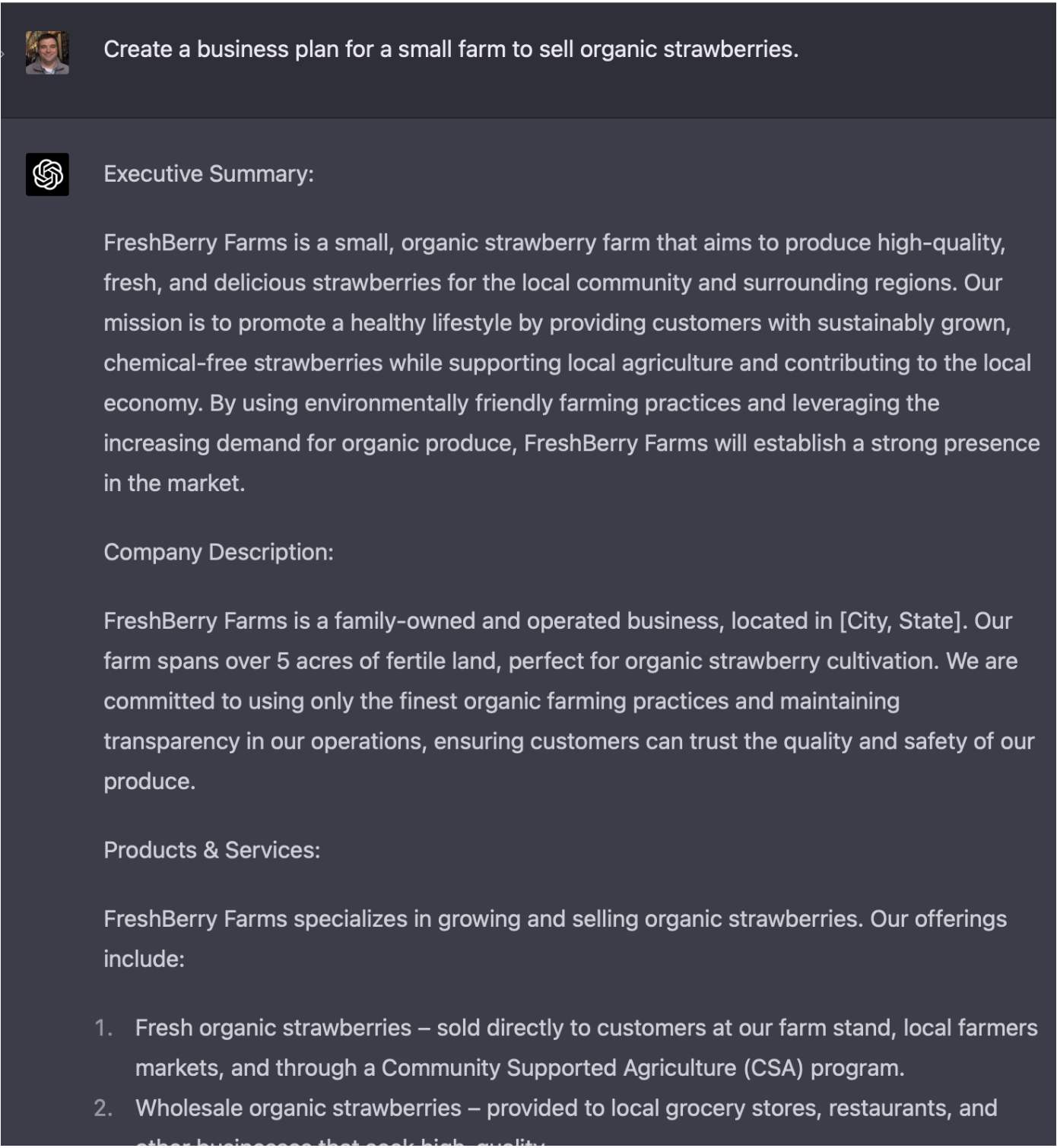

Today, many are using ChatGPT to write contracts, develop marketing, and even write business plans that provide viable company names. Of course, it’s also being used for poetry assignments.

Created using the ChatGPT-4 model at openai.com

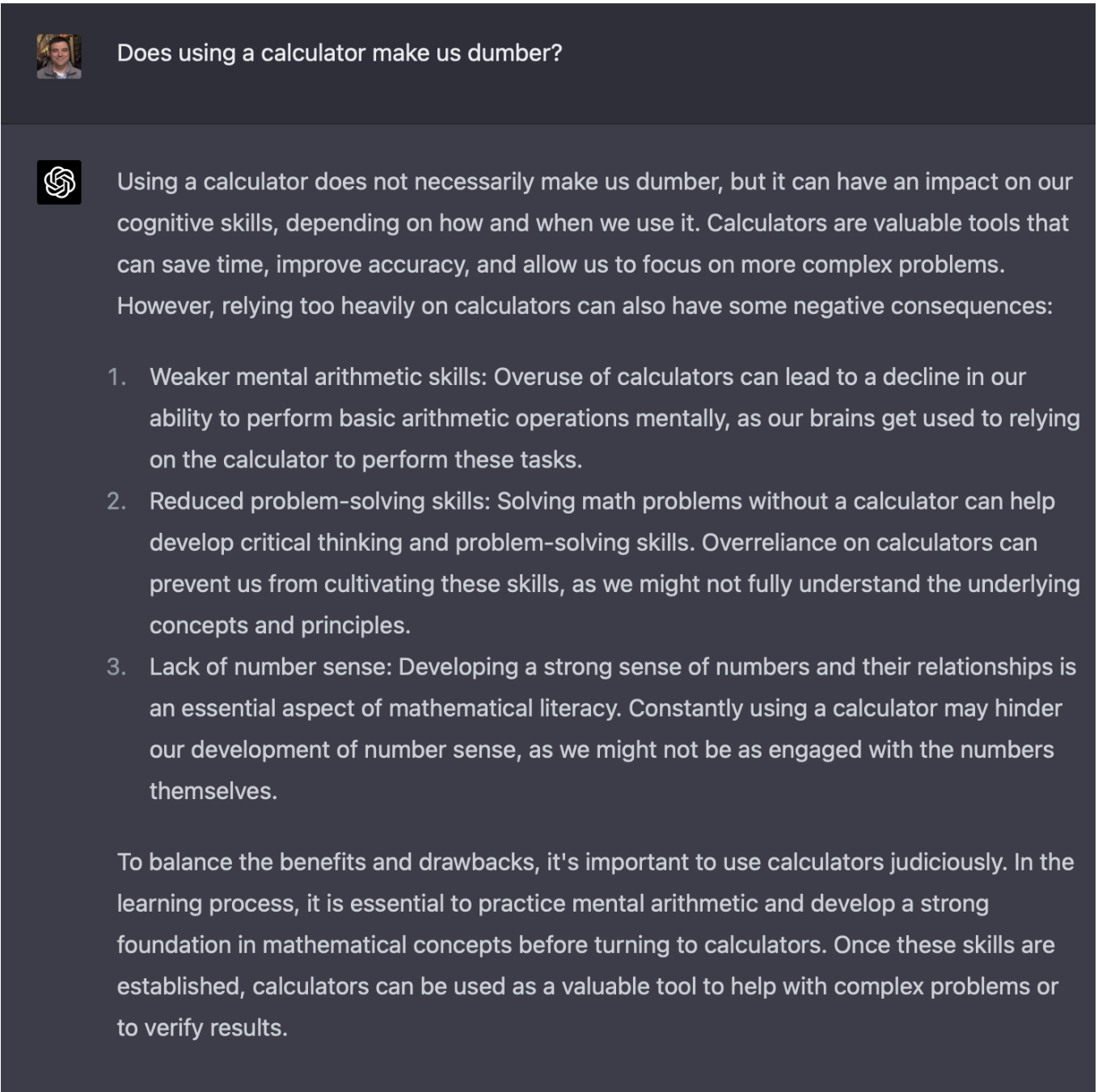

Created using the ChatGPT-4 model at openai.com

Technology almost always brings an increase in productivity, but the potential leap here seems like a whole new order of advancement. Ars Technica recently reported on an investment bank report that projects an almost unbelievable 7% increase in GDP due to AI over the next 10 years. Of course, an increase in productivity can also bring a loss of jobs: Ars Technica also noted a recent article from the creator of ChatGPT indicating that 80% of the US workforce could have 10% or more of their work performed by generative AI. Another report shows that nearly 63% of US workers could have up to half of their work replaced by AI, and for 7% of workers, nearly all of their work could be replaced by AI.

We at MartianCraft spent significant time during a recent Lunch & Learn session discussing and investigating possible uses of generative AI. We had ChatGPT write a marketing plan and blog posts and progressively build a mobile iOS application. Could AI do it by itself? No — it required human intervention and the proper inputs. Yet, with an effective driver, the possibilities were stunning.

Created using the ChatGPT-4 model at openai.com

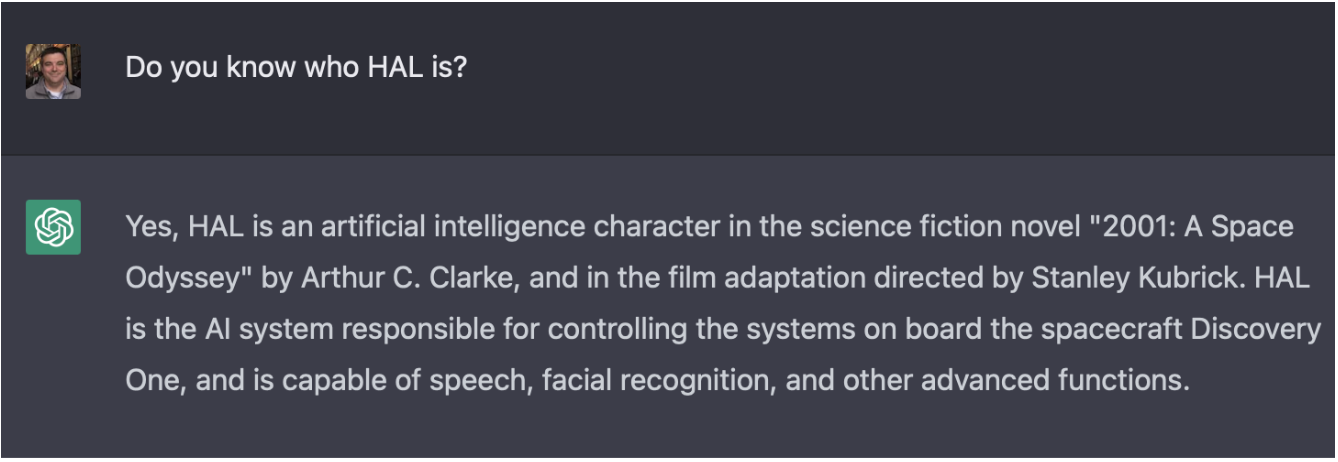

Created using the ChatGPT-4 model at openai.com

Honestly, it can be a bit frightening even for a non-Luddite. Will most businesses choose to maintain employees, or will they seek an increase in productivity and move to cut costs by replacing workers with AI? Maybe even more concerning, if AI can take a video of a person speaking and make that person say or do whatever the AI controller desires in a manner that is indistinguishable from an authentic recording, will we ever be able to prove anything again? Video has been nearly conclusive proof of reality up to this point. If anyone can present convincing video of almost anyone on Earth doing or saying anything, something that was nearly impossible before today, video evidence becomes close to worthless.

We certainly do live in interesting times. Some say this expression is actually a traditional Chinese curse.

The potential ethical problems of AI are legion. Whether cheating on homework, faking evidence, or replacing human labor, the potential negative impacts are concerning. This doesn’t even touch on the possibility of corrupting AI with bad data or manipulating the results to provide skewed or errant responses.

Returning to my son’s struggle: the poem. The immediate academic impacts of AI seem pretty obvious. Practically any essay or creative work can be produced by AI if it’s given the appropriate direction. To the untrained eye, the product delivered today is generally indistinguishable from human work. As I’ve spent more time with ChatGPT, I have certainly begun to see patterns and styles that become recognizable, but even these can be ameliorated to some extent. You can ask ChatGPT to write in the style of a specific person (or yourself, if you have data that has been processed by ChatGPT), or even ask it to regenerate its response in a formal, sarcastic, optimistic, persuasive, or lighthearted tone, or using diction at the level of an 8-year-old or a PhD student.

Created using the ChatGPT-4 model at openai.com


There are incredible systems in place in higher education today that check students’ work against the entirety of the internet to detect plagiarism. Most provide immediate scoring and feedback about the amount of material that matches sources on the internet. Can we ask AI if a student’s submission was created by AI? Per the Washington Post, one verification company is testing an AI solution, but early trials missed some AI content and, worse, incorrectly flagged a student’s original work as AI-generated. The education system will require a significant overhaul to combat cheating in this new AI world.

Yet the question to be posed might be altogether unexpected: should we even try? Current rules are clear on plagiarism, and current AI provides no citation for its sources. If these sorts of issues are resolved, are students just using the tools available to solve the problem at hand? Are verifiable academic resources available on the internet acceptable, if they are properly cited? Do we punish the general use of a calculator in most contexts? There is a quantifiable difference between a tool that does the entirety of the task and a tool used at the behest of its user, although an argument could be made that AI is just the rough trowel in the hand of the sculptor and the product of the AI is just an intermediary step. These are practical questions that will need to be addressed, just as the use of Google and Wikipedia has changed the educational landscape in the past two decades.

Unfortunately, I think the much more dire ethical concern is epistemological: it concerns how we learn and acquire knowledge. While certainly a generalization, I’ve frequently noticed that many younger people today struggle to make change in cash transactions. It seems apparent that most kids today depend on calculators to do basic math. While I have no scientific evidence to support the claim, it seems likely that a dependence on technology has dulled our ability to do the underlying menial tasks.

Created using the ChatGPT-4 model at openai.com


Returning to the poetry assignment: if it becomes acceptable or, through cheating, easy to bypass poetry assignments by having AI produce the work, will something be lost? It seems likely that students who use such tools won’t gain the same level of knowledge and ability as those who actually do the work. And maybe poetry isn’t that important a task to many, but what about potentially more complex tasks?

A reliance on such a powerful and transformative technology could displace the very methods we use to learn. Society may come to the conclusion that it’s acceptable to have AI take over the creation of poetry. Depending on what you value, this may be no great loss — but what if society lost intrinsic knowledge of foundational medical or scientific skills? Again, we might accept that, as long as the job is completed, it’s irrelevant if we lose some cognitive understanding — but this seems deeply troubling.

The concern is the future impact of shortcutting epistemological foundations in human cognitive ability. AI today is very much limited to smartly regurgitating what humanity has already generated. It must work within the confines of existing knowledge and the declarative instructions of its handler. But if we lose our capacity to expand our societal knowledge, epistemological progression stalls, and so does AI. Advances in knowledge and even creative ability will stop, and we will become ever more reliant on AI just to maintain the status quo. We won’t just forget how to make change but also how to write poetry and music or write software or cure new diseases.

Of course, this is but one of the ethical concerns of AI and its ability to manipulate and misinform, to fabricate false realities, or to be weaponized in ways that are hard to even imagine yet. We joke about HAL and Terminator, but many realities today were the fodder of science fiction not far past.

Created using the ChatGPT-4 model at openai.com


It should not go without notice that many pioneers in the technological revolution, from Steve Wozniak to Elon Musk, recently published an open letter calling for a pause on large-scale “giant” AI work at AI labs globally. If the labs will not pause on their own, the letter says, “governments should step in and institute a moratorium.” The reasons noted include propaganda and untruth, automation of human jobs (including “the fulfilling ones”), and the risk of loss of control of human civilization. The measures proposed for the pause seem more akin to preventing SkyNet and a real-life Terminator scenario, but they also include critical steps: tracking and watermarking AI systems so we can understand the provenance of assets, and coping with the economic impacts to “give society a chance to adapt.”

It makes one ponder recent actions at Microsoft. As ChatGPT and AI are being rapidly integrated into Microsoft’s Bing and other products in a very public manner, The Verge recently reported that Microsoft laid off the team responsible for overseeing the ethical aspects of AI. Microsoft claims to continue to maintain an “Office of Responsible AI,” but it’s hard not to be concerned that the very team that was responsible for analyzing and considering the integration of OpenAI tools has been completely gutted. It is also hard not to speculate on the motivations, considering the massive potential financial upside for AI in the next decade.

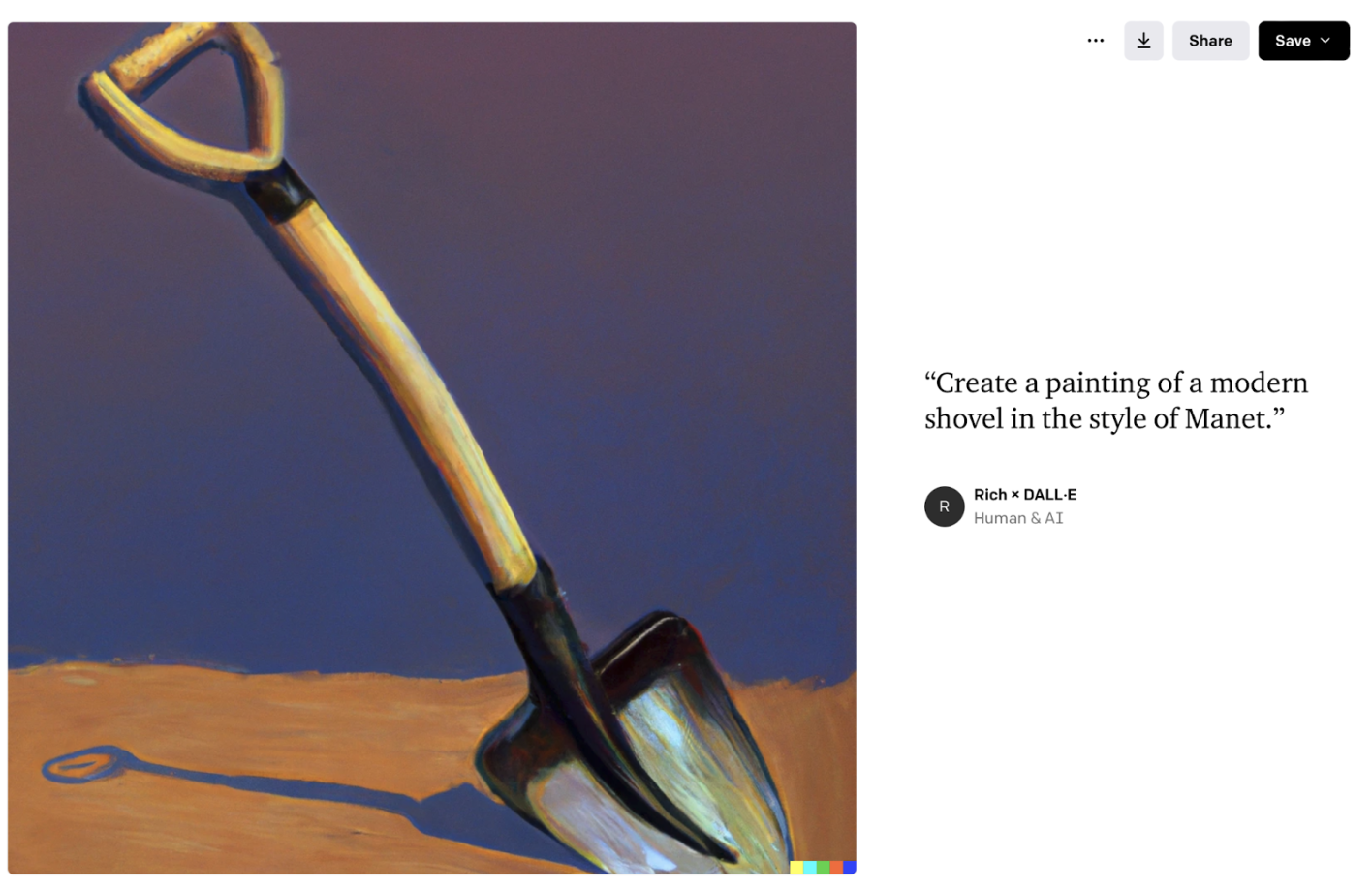

Created using DALL-E 2 at openai.com


AI is a tool. While it can be hard to compare to a shovel, a tractor, or a calculator, used responsibly it can empower us to advance society and bring a better standard of living to the world. It can solve problems that are too difficult or expensive to solve otherwise. It can provide cost savings and productivity enhancements to businesses that can drive innovation and advancement. The potential opportunities and benefits are enormous — but with great power comes great responsibility. How do we as a responsible society oversee an ethical and just use of AI in the future?

The advancements available to us will require both experts in the tools themselves — guiding and directing AI in the most effective manner — and perseverance in its ethical use. Without knowledgeable human oversight, AI often produces ineffective or even harmful results. Used maliciously, the results can be all too effective. This requires both training and expertise as well as ethical safeguards.

At MartianCraft, we’re certainly excited about the potential of AI, and we’re educating ourselves about its effective use. We believe that when it’s used properly, much good can be accomplished.

History has shown that humanity most often addresses the ethical issues of innovations after problems fully exhibit themselves. Our next steps today could make the difference between a future akin to Disney’s WALL-E, us being locked out in the coldness of space like our friend Dave, or an embrace of the next positive technological revolution that advances society to new heights. Interesting times indeed!

NOTE: Other than the examples shown in the images in this article, none of this article was generated with AI.
